ReliAPI

December 4, 2025

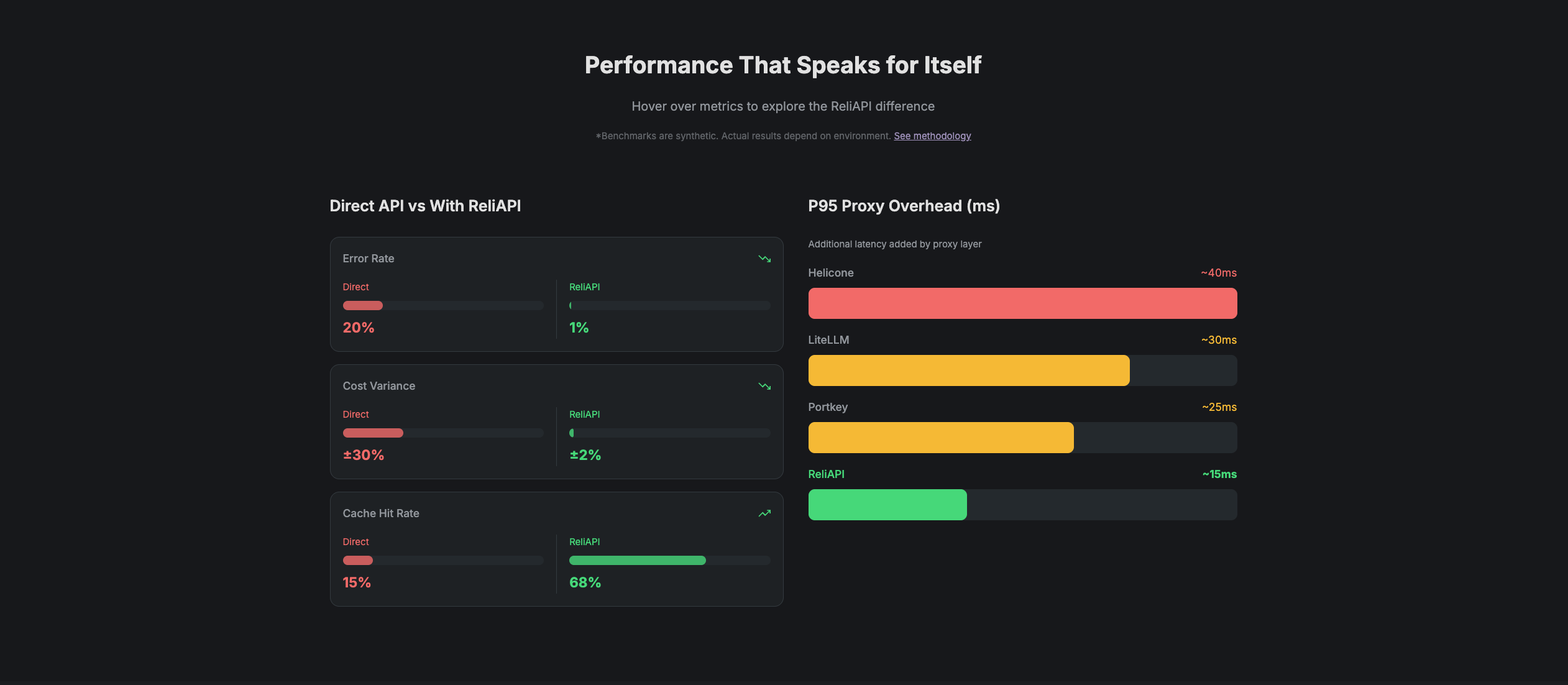

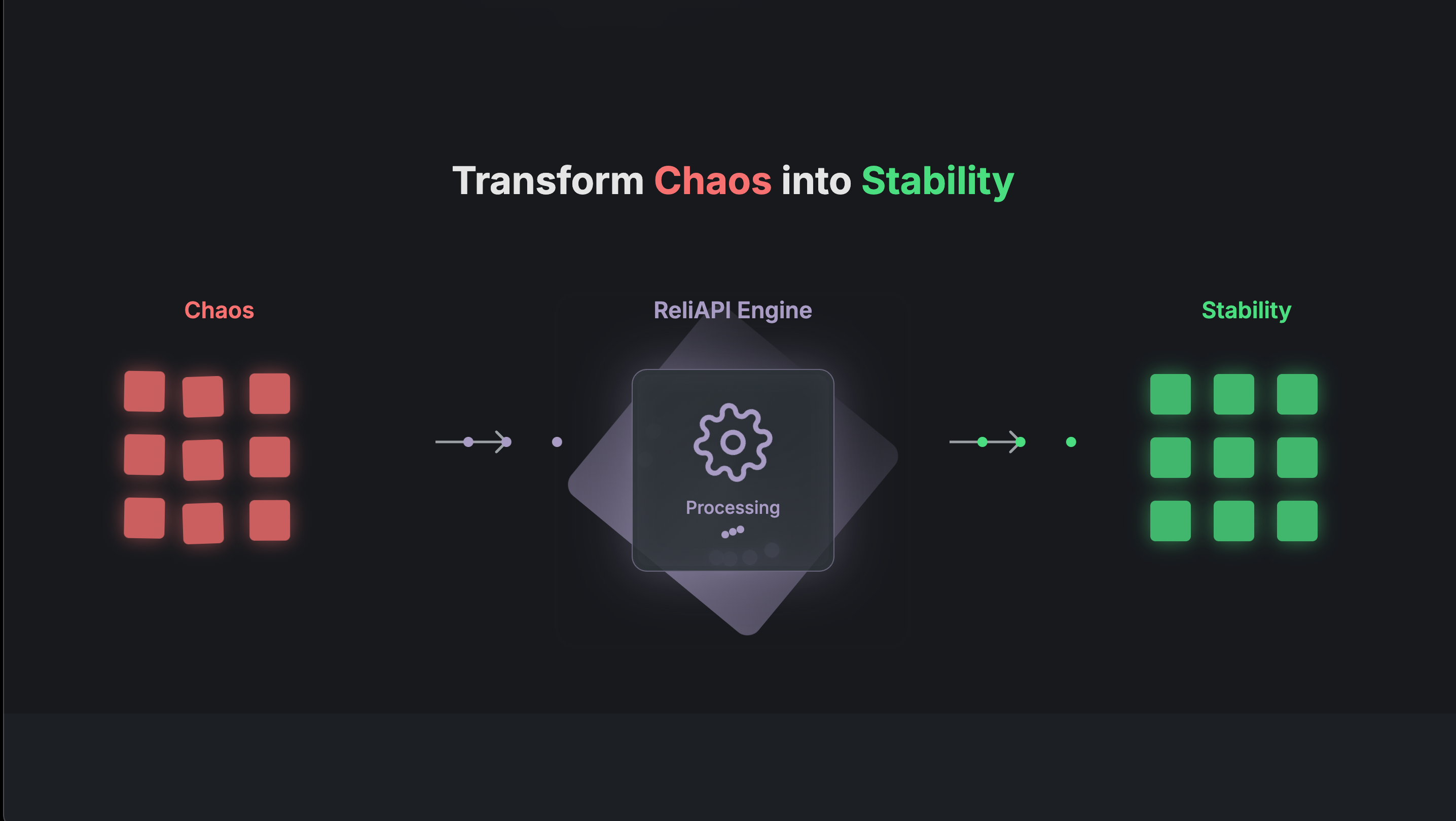

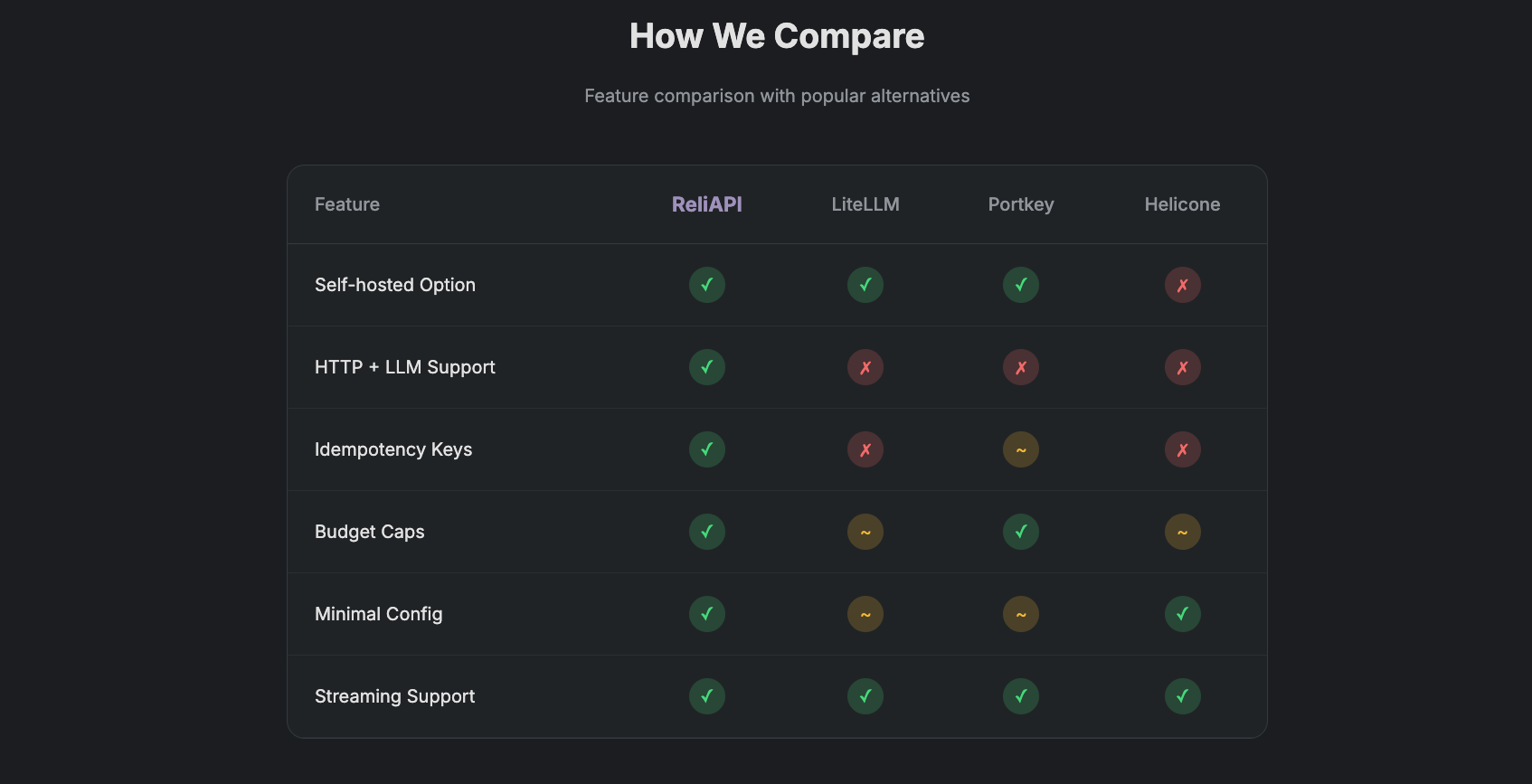

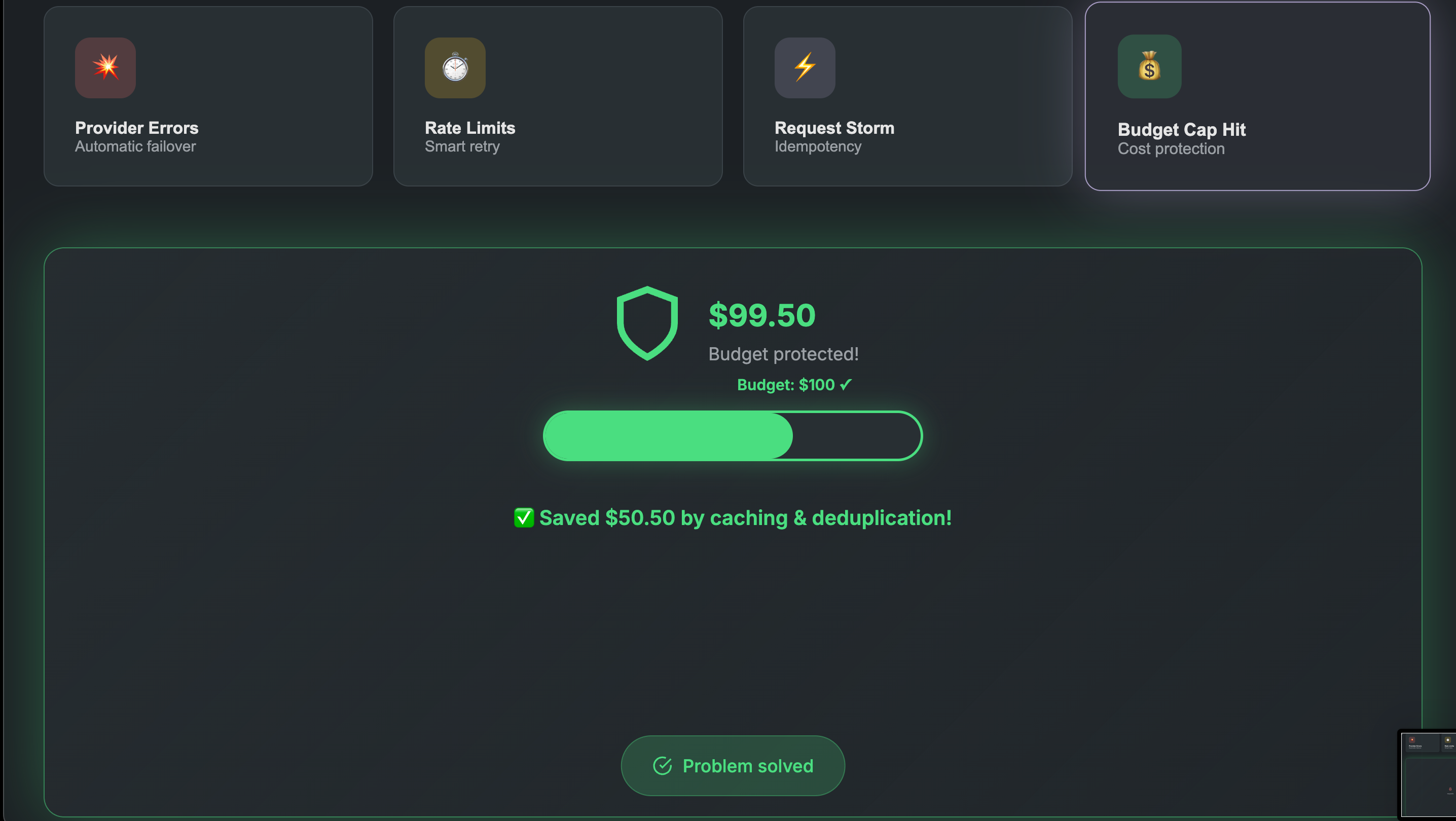

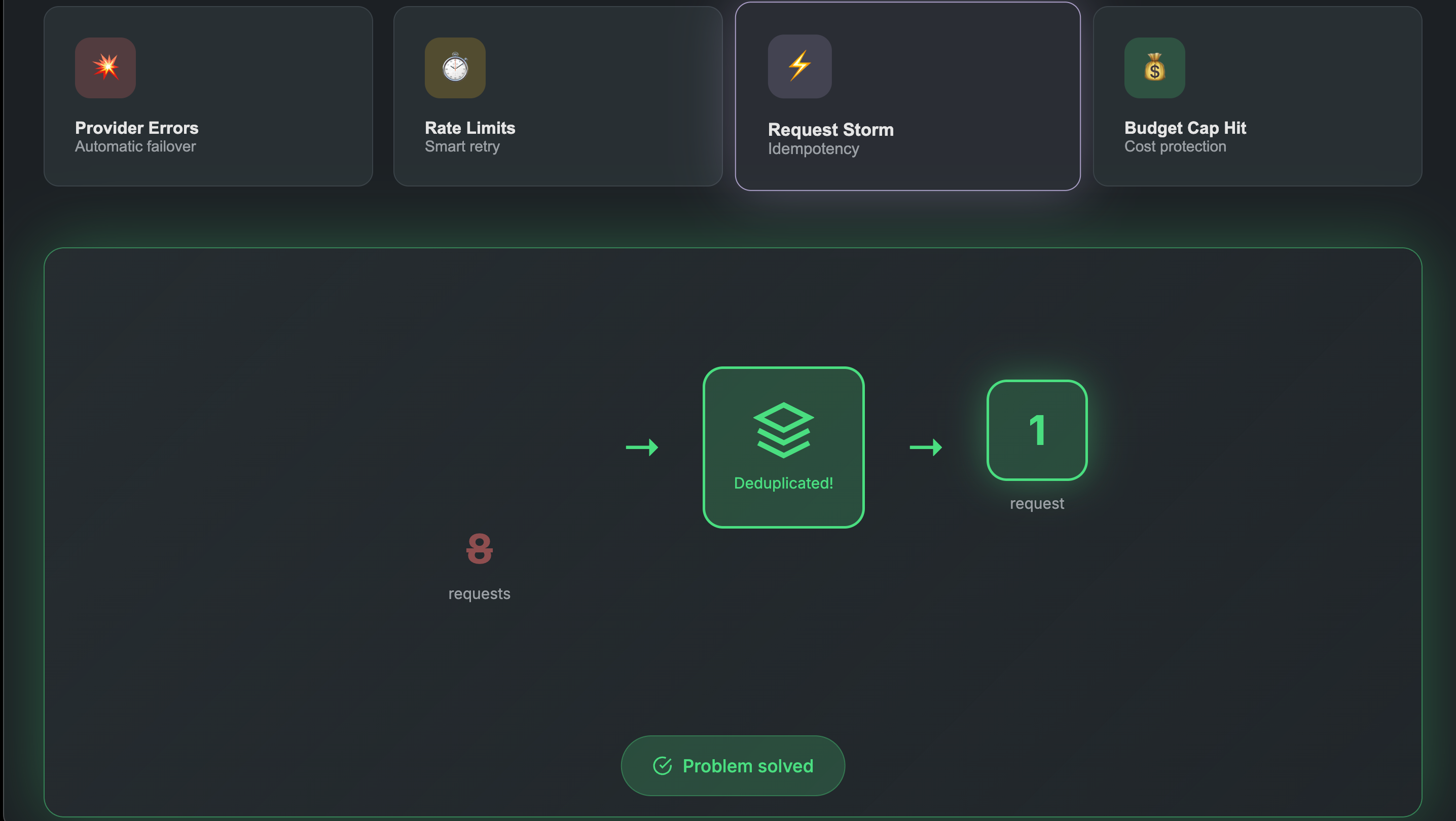

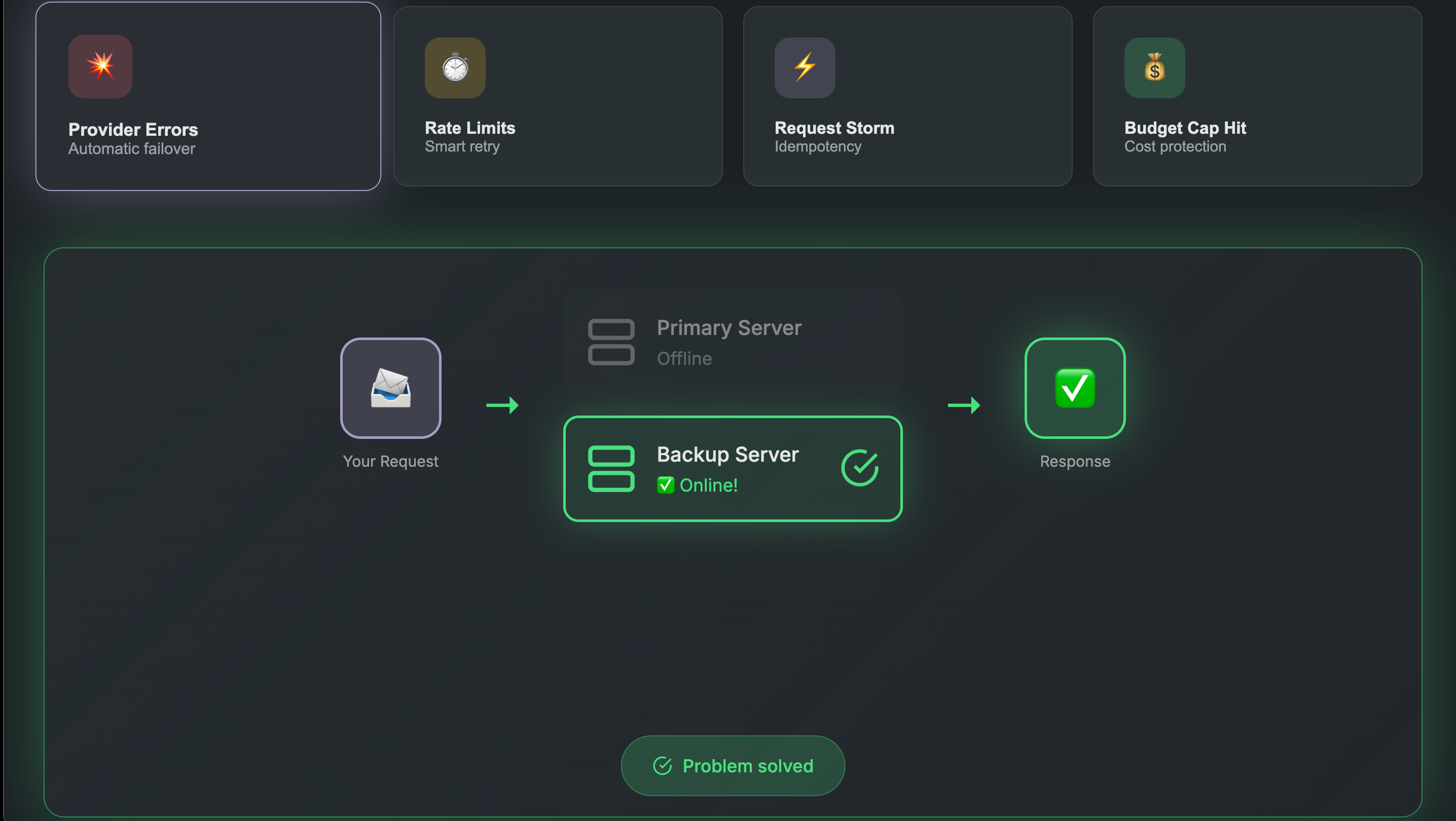

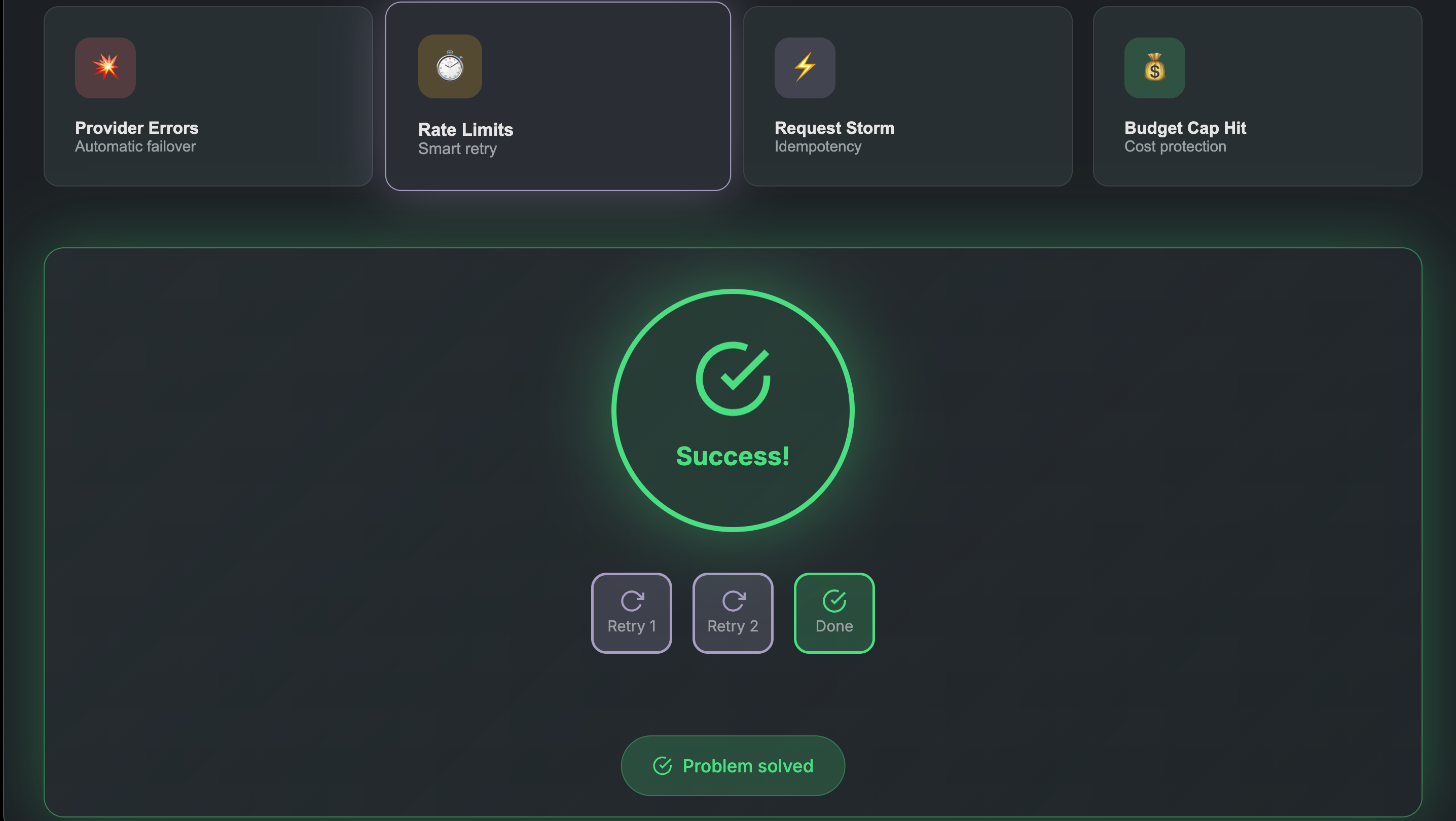

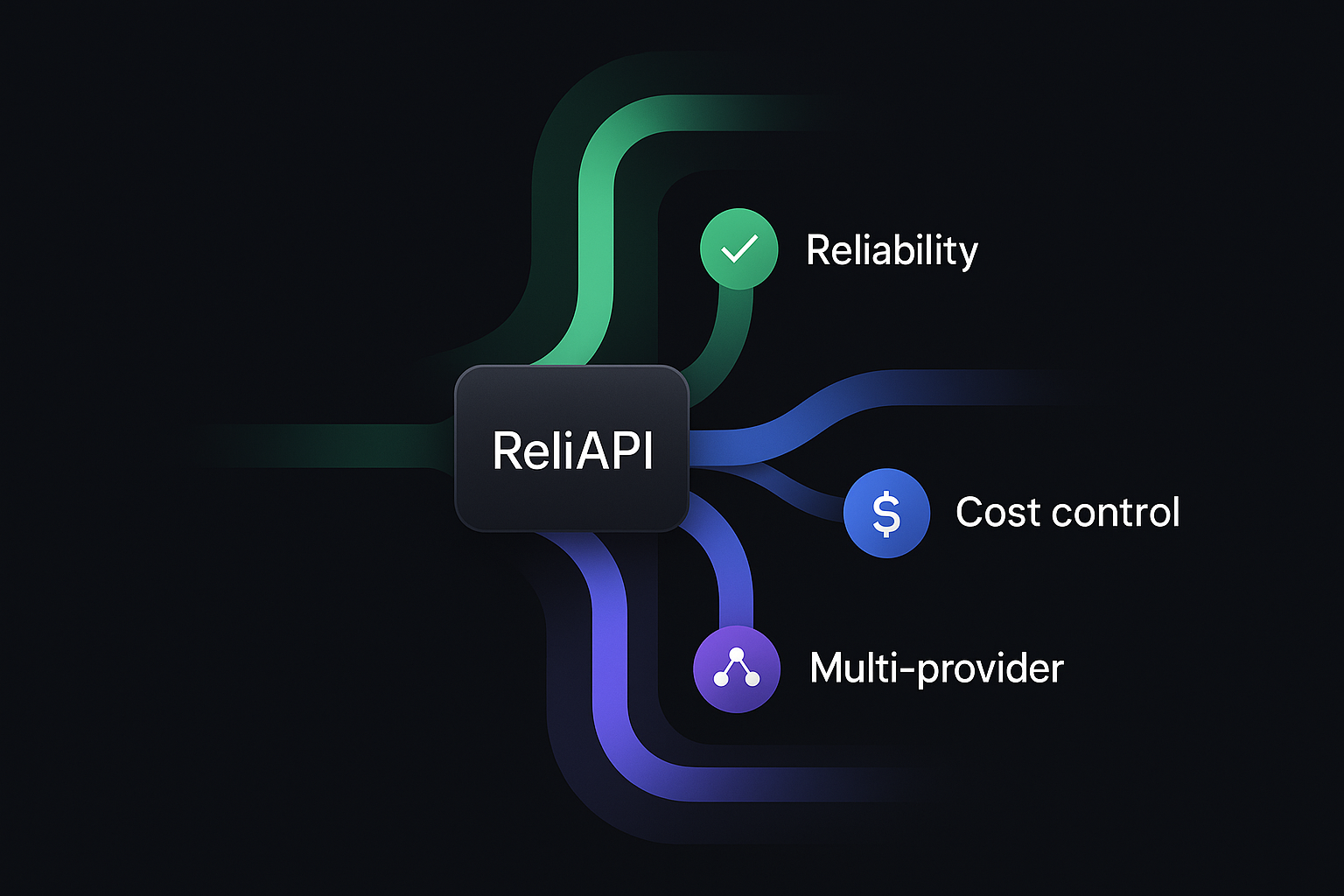

Unlike generic API proxies, ReliAPI is built specifically for LLM APIs (OpenAI, Anthropic, Mistral), and it also works with plain HTTP APIs. It is designed around LLM-specific challenges such as token costs, streaming, and rate limits. Key differentiators:

- Smart caching that reduces costs by 50-80%
- Idempotency that prevents duplicate charges
- Budget caps that reject expensive requests
- Automatic retries with exponential backoff and a circuit breaker
- Real-time cost tracking for LLM calls

ReliAPI is available on RapidAPI.
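To make "automatic retries with exponential backoff and a circuit breaker" concrete, here is a minimal client-side sketch in Python. It is an illustration of the general technique under stated assumptions, not ReliAPI's internals or API: the names `CircuitBreaker`, `call_with_retries`, and `CircuitOpenError`, as well as the default thresholds and delays, are hypothetical. It also retries on any exception, whereas a real client would usually retry only on rate limits (HTTP 429), 5xx responses, and timeouts.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is refused locally."""


class CircuitBreaker:
    """Hypothetical breaker: opens after `failure_threshold` consecutive
    failures. After `reset_timeout` seconds calls are allowed again
    (half-open); a success closes it, another failure re-opens it."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True  # closed: traffic flows normally
        # Open until the timeout elapses, then half-open (trial calls allowed).
        return self.clock() - self.opened_at >= self.reset_timeout

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()  # (re)open and restart the timeout


def call_with_retries(fn: Callable[[], T], breaker: CircuitBreaker,
                      max_attempts: int = 4, base_delay: float = 0.5,
                      max_delay: float = 8.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `fn`, retrying failures with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_exc: Optional[Exception] = None
    for attempt in range(max_attempts):
        if not breaker.allow():
            # Fail fast instead of spending quota on an upstream that is down.
            raise CircuitOpenError("circuit open; request not sent upstream")
        try:
            result = fn()
        except Exception as exc:  # sketch only: retries on every exception
            breaker.record_failure()
            last_exc = exc
            if attempt == max_attempts - 1:
                break  # no sleep after the final attempt
            # Full jitter: sleep uniformly in [0, min(max_delay, base * 2**attempt)].
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0.0, cap))
        else:
            breaker.record_success()
            return result
    assert last_exc is not None
    raise last_exc


if __name__ == "__main__":
    state = {"n": 0}

    def flaky() -> str:
        # Simulated upstream: fails twice, then succeeds.
        state["n"] += 1
        if state["n"] < 3:
            raise ConnectionError("upstream 503")
        return "ok"

    print(call_with_retries(flaky, CircuitBreaker(failure_threshold=5),
                            sleep=lambda s: None))  # prints "ok"
```

Full jitter spreads retries from many clients over time so they do not hit a recovering provider in lockstep, and the breaker stops retries entirely once failures pile up, which matters when every request carries a token cost.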
